Infiniband Switch

To use SR-IOV, virtualization support must be enabled in the subnet manager (SM) on the InfiniBand switch.

[Switch Hostname] > enable

[Switch Hostname] # configure terminal

[Switch Hostname] (config) # show ib sm virt

Enabled

Reference:

Command Line Interface

https://docs.nvidia.com/networking/display/MLNXOSv381000/Command+Line+Interface

Standard mode (default) > "enable" into Enable mode > "configure terminal" into config mode.

Alternatively, check the following parameter in the /opensm.conf file:

# Virtualization support

# 0: Ignore Virtualization - No virtualization support

# 1: Disable Virtualization - Disable virtualization on all

# Virtualization supporting ports

# 2: Enable Virtualization - Enable virtualization on all

# Virtualization supporting ports

virt_enabled 0

(config) # show ib sm virt

Ignored

If virtualization is not enabled, enable it:

(config) # ib sm virt enable

(config) # configuration write

<<Reboot the IB switch with the SM, yes this is disruptive to the IB fabric.>>

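> A reboot is required for the change to take effect, and the IB network is disconnected while the switch reboots, so review the impact before applying and enabling this setting.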
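The mapping between the `virt_enabled` value and the reported state can be sketched as below. This is a minimal illustration, not part of any NVIDIA tool: the function name `virt_state` is hypothetical, the labels "Ignored" and "Enabled" are taken from the CLI output shown above, and the label "Disabled" for value 1 is an assumption based on the comment in the configuration file.

```python
# Sketch: interpret the virt_enabled setting from opensm.conf-style text.
# "Disabled" for value 1 is an assumed label; the other two appear in the CLI output above.
VIRT_STATES = {0: "Ignored", 1: "Disabled", 2: "Enabled"}

def virt_state(conf_text):
    """Return the state name for the last virt_enabled line, or None if absent."""
    state = None
    for line in conf_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        parts = line.split()
        if len(parts) == 2 and parts[0] == "virt_enabled":
            state = VIRT_STATES.get(int(parts[1]))
    return state

sample = """# Virtualization support
virt_enabled 0
"""
print(virt_state(sample))  # Ignored
```

A value of 0 or 1 means the enable step above is still needed before SR-IOV virtual ports can be used.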

Install InfiniBand Adapter in SR-IOV Mode on VMware

Overview

The SR-IOV configuration consists of the following steps.

- Enable virtualization (Virtualization / SR-IOV) in the BIOS

- Enable SR-IOV in the IB HCA firmware

- Enable SR-IOV in the MLNX_OFED driver

- Attach/assign the network device to the virtual machine (VM) through SR-IOV

Hardware and Software Requirements

- A server platform with an SR-IOV-capable motherboard BIOS

- NVIDIA ConnectX®-6 adapter

- Installer Privileges: The installation requires administrator privileges on the target machine

Note. Reviewing the following NVIDIA IB configuration guides first is recommended.

HowTo Configure NVIDIA ConnectX-6 InfiniBand adapter in SR-IOV mode on VMware ESXi 7.0 and above

HowTo Configure NVIDIA ConnectX-5 or ConnectX-6 Adapter in SR-IOV Mode on VMware ESXi 6.7 and 7.0 and above

InfiniBand SR-IOV Setup and Performance Study on vSphere 7.x

https://www.vmware.com/techpapers/2022/vsphere7x-infiniband-sriov-setup-perf.html

SR-IOV Configuration

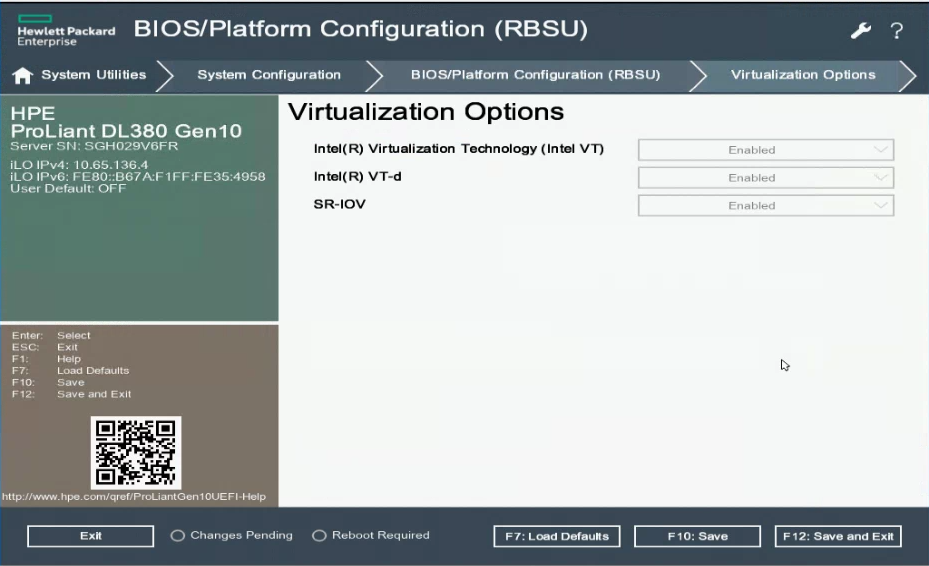

Verify That SR-IOV Is Enabled in the BIOS

On an HPE ProLiant Gen10 system, set the Workload Profile to Virtualization – Max Performance.

From the System Utilities screen, select System Configuration > BIOS/Platform Configuration (RBSU) > Workload Profile > select Virtualization – Max Performance

Note. With this profile, Intel VT, Intel VT-d, and SR-IOV are enabled by default.

If a different Workload Profile is required, or the options need to be checked individually:

From the System Utilities screen, select System Configuration > BIOS/Platform Configuration (RBSU) > System Options > Virtualization Options > SR-IOV/Intel VT/Intel VT-d > select Enabled

Install VMware ESXi Server 7.0 U3

Note. The VMware ESXi installation itself is outside the scope of this document.

Note. Version used for this document: VMware-ESXi-7.0.3-19193900-HPE-703.0.0.10.8.1.3-Jan2022

HPE Customized ESXi Image

https://www.hpe.com/us/en/servers/hpe-esxi.html

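Note. The VCSA (vCenter) installation is also outside the scope of this document; it is optional and not directly related to this configuration.

Note. The steps below are performed on the ESXi host.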

Install OFED ESXi Eth Driver

Reference:

HowTo Upgrade NVIDIA ConnectX Driver on VMware ESXi 6.7 and 7.0 and above

VMware ESXi 7.0 U2 nmlx5_core 4.21.71.101 Driver CD for Mellanox ConnectX-4/5/6 Ethernet Adapters

4.21.71.101

# lspci | grep Mellanox

0000:37:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6] [vmnic4]

0000:37:00.1 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6] [vmnic5]

# esxcli software vib list | grep nmlx

nmlx4-core 3.19.70.1-1OEM.670.0.0.8169922 MEL VMwareCertified 2023-06-30

nmlx4-en 3.19.70.1-1OEM.670.0.0.8169922 MEL VMwareCertified 2023-06-30

nmlx4-rdma 3.19.70.1-1OEM.670.0.0.8169922 MEL VMwareCertified 2023-06-30

nmlx5-core 4.21.71.101-1OEM.702.0.0.17630552 MEL VMwareCertified 2023-06-30

nmlx5-rdma 4.21.71.101-1OEM.702.0.0.17630552 MEL VMwareCertified 2023-06-30

Note. The VMware-ESXi-7.0.3-19193900-HPE-703.0.0.10.8.1.3-Jan2022 image includes driver version "4.21.71.101".

> Download the driver from the location above, extract it, and upload it to the /tmp directory on the ESXi host with scp.

# esxcli software vib install -d <path>/<bundle_file>

# esxcli software vib install -d /tmp/Mellanox-nmlx5_4.21.71.101-1OEM.702.0.0.17630552_18117880.zip

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: MEL_bootbank_nmlx5-core_4.21.71.101-1OEM.702.0.0.17630552, MEL_bootbank_nmlx5-rdma_4.21.71.101-1OEM.702.0.0.17630552

VIBs Removed: MEL_bootbank_nmlx5-core_4.22.73.1004-1OEM.703.0.0.18644231, MEL_bootbank_nmlx5-rdma_4.22.73.1004-1OEM.703.0.0.18644231

VIBs Skipped:

Remove unused drivers:

# esxcli software vib remove -n nmlx4-en

# esxcli software vib remove -n nmlx4-rdma

# esxcli software vib remove -n nmlx4-core

Reboot to apply the changes

> Reboot the ESXi host.
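The driver check and cleanup above can be cross-checked by parsing saved `esxcli software vib list` output. The sketch below is illustrative only: `nmlx_vibs` is a hypothetical helper, and it assumes the whitespace-separated column layout shown in the listing above (name first, version second).

```python
def nmlx_vibs(vib_list_text):
    """Map VIB name -> version for lines whose name starts with 'nmlx'."""
    vibs = {}
    for line in vib_list_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0].startswith("nmlx"):
            vibs[fields[0]] = fields[1]
    return vibs

sample = """\
nmlx4-core 3.19.70.1-1OEM.670.0.0.8169922 MEL VMwareCertified 2023-06-30
nmlx5-core 4.21.71.101-1OEM.702.0.0.17630552 MEL VMwareCertified 2023-06-30
nmlx5-rdma 4.21.71.101-1OEM.702.0.0.17630552 MEL VMwareCertified 2023-06-30
"""
vibs = nmlx_vibs(sample)
# nmlx4-* VIBs are the ones removed in the cleanup step above.
legacy = sorted(name for name in vibs if name.startswith("nmlx4"))
print(vibs["nmlx5-core"].split("-")[0])  # driver version without the build suffix
print(legacy)
```

An empty `legacy` list after the reboot would indicate that the nmlx4 VIBs are gone.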

Install MFT Tool

Reference:

HowTo Install NVIDIA Firmware Tools (MFT) on VMware ESXi 6.7 and 7.0

https://network.nvidia.com/products/adapter-software/firmware-tools/

> Download the tools from the location above, extract them, and upload them to the /tmp directory on the ESXi host with scp.

# esxcli software vib install -d /tmp/Mellanox-MFT-Tools_4.24.0.72-1OEM.703.0.0.18644231_21704093.zip

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: MEL_bootbank_mft-oem_4.24.0.72-0, MEL_bootbank_mft_4.24.0.72-0

VIBs Removed:

VIBs Skipped:

# esxcli software vib install -d /tmp/Mellanox-NATIVE-NMST_4.24.0.72-1OEM.703.0.0.18644231_21734314.zip

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: MEL_bootbank_nmst_4.24.0.72-1OEM.703.0.0.18644231

VIBs Removed: MEL_bootbank_nmst_4.14.3.3-1OEM.700.1.0.15525992

VIBs Skipped:

Reboot to apply the changes

> Reboot the ESXi host.

Note. (Optional) To run the tools without typing the full path, add the tool directory to PATH.

export PATH=$PATH:/opt/mellanox/bin

Enable SR-IOV in the Firmware

# /opt/mellanox/bin/mst start

Module mst is already loaded

# /opt/mellanox/bin/mst status

MST devices:

------------

mt4123_pciconf0

# /opt/mellanox/bin/mst status -vv

PCI devices:

------------

DEVICE_TYPE MST PCI RDMA NET NUMA

ConnectX6(rev:0) mt4123_pciconf0 37:00.0

ConnectX6(rev:0) mt4123_pciconf0.1 37:00.1

Note. If the mst commands do not print the expected output, the MLNX OFED driver may not have been installed or loaded correctly.
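To pick the device name that the later mlxconfig commands take with `-d`, the `mst status -vv` rows can be parsed as sketched below. The helper name `mst_devices` is hypothetical, and the parsing assumes the column order shown above (device type, MST name, PCI address).

```python
def mst_devices(status_text):
    """Return (mst_device, pci_address) pairs from 'mst status -vv' style rows."""
    devices = []
    for line in status_text.splitlines():
        fields = line.split()
        # Data rows start with the device type, e.g. 'ConnectX6(rev:0)'.
        if len(fields) >= 3 and fields[0].startswith("ConnectX"):
            devices.append((fields[1], fields[2]))
    return devices

sample = """\
DEVICE_TYPE MST PCI RDMA NET NUMA
ConnectX6(rev:0) mt4123_pciconf0 37:00.0
ConnectX6(rev:0) mt4123_pciconf0.1 37:00.1
"""
print(mst_devices(sample))
```

An empty result corresponds to the failure case described in the note above.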

Check the current device configuration:

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 q

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot

...

NUM_OF_VFS 0

NUM_OF_PF 2

SRIOV_EN False(0)

...

Set the link type of the target device (all ports or selected ports) to InfiniBand:

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 set LINK_TYPE_P1=1

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot New

LINK_TYPE_P1 IB(1) IB(1)

Apply new Configuration? (y/n) [n] : y

Applying... Done!

-I- Please reboot machine to load new configurations.

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 set LINK_TYPE_P2=1

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot New

LINK_TYPE_P2 IB(1) IB(1)

Apply new Configuration? (y/n) [n] : y

Applying... Done!

-I- Please reboot machine to load new configurations.

Override the PCI subclass so that the adapter is presented to ESXi as an Ethernet controller:

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 set ADVANCED_PCI_SETTINGS=1

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot New

ADVANCED_PCI_SETTINGS False(0) True(1)

Apply new Configuration? (y/n) [n] : y

Applying... Done!

-I- Please reboot machine to load new configurations.

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 set FORCE_ETH_PCI_SUBCLASS=1

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot New

FORCE_ETH_PCI_SUBCLASS False(0) True(1)

Apply new Configuration? (y/n) [n] : y

Applying... Done!

-I- Please reboot machine to load new configurations.

Reboot to apply the changes

> Reboot the ESXi host.

Enable SR-IOV and set the desired number of Virtual Functions (VFs):

a. SRIOV_EN=1

b. NUM_OF_VFS=16 ; This example configures 16 VFs per port (per physical function), which matches the VF listing shown later in this document.

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 s SRIOV_EN=1 NUM_OF_VFS=16

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot New

SRIOV_EN False(0) True(1)

NUM_OF_VFS 0 16

Apply new Configuration? (y/n) [n] : y

Applying... Done!

-I- Please reboot machine to load new configurations.

Reboot to apply the changes

> Reboot the ESXi host.

Check the SR-IOV status:

# /opt/mellanox/bin/mlxconfig -d mt4123_pciconf0 q

Device #1:

----------

Device type: ConnectX6

Name: MCX653106A-ECA_HPE_Ax

Description: HPE InfiniBand HDR100/Ethernet 100Gb 2-port MCX653106A-ECAT QSFP56 x16 Adapter

Device: mt4123_pciconf0

Configurations: Next Boot

...

NUM_OF_VFS 16

NUM_OF_PF 2

SRIOV_EN True(1)

...
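The status check above can be automated by parsing a saved `mlxconfig ... q` listing. This is a rough sketch under stated assumptions: `parse_mlxconfig` and `sriov_ready` are hypothetical helpers, and the parser only handles simple two-column "NAME VALUE" rows like the ones shown above.

```python
import re

def parse_mlxconfig(query_text):
    """Parse 'NAME VALUE' rows of an mlxconfig query into a dict of strings."""
    settings = {}
    for line in query_text.splitlines():
        m = re.match(r"^\s*([A-Z][A-Z0-9_]+)\s+(\S+)\s*$", line)
        if m:
            settings[m.group(1)] = m.group(2)
    return settings

def sriov_ready(settings, wanted_vfs):
    """True when SR-IOV is enabled and at least wanted_vfs VFs are configured."""
    enabled = settings.get("SRIOV_EN", "").startswith("True")
    return enabled and int(settings.get("NUM_OF_VFS", "0")) >= wanted_vfs

sample = """\
NUM_OF_VFS 16
NUM_OF_PF 2
SRIOV_EN True(1)
"""
settings = parse_mlxconfig(sample)
print(sriov_ready(settings, 16))
```

Header lines such as "Device type: ConnectX6" are skipped because the pattern only accepts upper-case parameter names.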

Enable SR-IOV in the Driver

Review the configurable module parameters:

# esxcli system module parameters list -m nmlx5_core

Enable SR-IOV and set the number of virtual functions for each port:

# esxcli system module parameters set -m nmlx5_core -p "max_vfs=16,16"

Reboot to apply the changes

> Reboot the ESXi host.
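The `max_vfs` value is a comma-separated list with one entry per port. The sketch below builds that string and checks each entry against the firmware setting; the helper `max_vfs_param` is hypothetical, and the rule that the driver value should not exceed the firmware NUM_OF_VFS is an assumption stated here for illustration.

```python
def max_vfs_param(vfs_per_port, firmware_limit):
    """Build the value for the nmlx5_core max_vfs parameter, one entry per port."""
    for count in vfs_per_port:
        # Assumption: the driver setting should stay within the firmware NUM_OF_VFS.
        if not 0 <= count <= firmware_limit:
            raise ValueError(f"{count} VFs is outside 0..{firmware_limit}")
    return "max_vfs=" + ",".join(str(c) for c in vfs_per_port)

param = max_vfs_param([16, 16], firmware_limit=16)
print(f'esxcli system module parameters set -m nmlx5_core -p "{param}"')
```

With two ports at 16 VFs each, the host is expected to expose 32 virtual functions in total.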

# esxcli network nic list

Name PCI Device Driver Admin Status Link Status Speed Duplex MAC Address MTU Description

------ ------------ ---------- ------------ ----------- ----- ------ ----------------- ---- -----------

vmnic0 0000:02:00.0 ntg3 Up Up 1000 Full b4:7a:f1:35:49:5a 1500 Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet

vmnic1 0000:02:00.1 ntg3 Up Down 0 Half b4:7a:f1:35:49:5b 1500 Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet

vmnic2 0000:02:00.2 ntg3 Up Down 0 Half b4:7a:f1:35:49:5c 1500 Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet

vmnic3 0000:02:00.3 ntg3 Up Down 0 Half b4:7a:f1:35:49:5d 1500 Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet

vmnic4 0000:37:00.0 nmlx5_core Up Down 0 Half 94:40:c9:a5:75:a8 1500 Mellanox Technologies MT28908 Family [ConnectX-6]

vmnic5 0000:37:00.1 nmlx5_core Up Down 0 Half 94:40:c9:a5:75:a9 1500 Mellanox Technologies MT28908 Family [ConnectX-6]

vmnic6 0000:13:00.0 bnxtnet Up Down 0 Half f4:03:43:d4:a4:80 1500 Broadcom BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller

vmnic7 0000:13:00.1 bnxtnet Up Down 0 Half f4:03:43:d4:a4:88 1500 Broadcom BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller

# lspci -d | grep Mellanox

0000:37:00.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6] [vmnic4]

0000:37:00.1 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6] [vmnic5]

0000:37:00.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_0]

0000:37:00.3 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_1]

0000:37:00.4 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_2]

0000:37:00.5 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_3]

0000:37:00.6 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_4]

0000:37:00.7 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_5]

0000:37:01.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_6]

0000:37:01.1 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_7]

0000:37:01.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_8]

0000:37:01.3 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_9]

0000:37:01.4 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_10]

0000:37:01.5 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_11]

0000:37:01.6 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_12]

0000:37:01.7 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_13]

0000:37:02.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_14]

0000:37:02.1 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_15]

0000:37:02.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_0]

0000:37:02.3 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_1]

0000:37:02.4 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_2]

0000:37:02.5 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_3]

0000:37:02.6 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_4]

0000:37:02.7 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_5]

0000:37:03.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_6]

0000:37:03.1 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_7]

0000:37:03.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_8]

0000:37:03.3 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_9]

0000:37:03.4 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_10]

0000:37:03.5 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_11]

0000:37:03.6 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_12]

0000:37:03.7 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_13]

0000:37:04.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_14]

0000:37:04.1 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_15]
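Counting the virtual functions per physical function confirms that the firmware and driver settings took effect. The sketch below assumes the bracketed `[PF_<bus>_VF_<n>]` label format shown in the listing above; `vfs_per_pf` is a hypothetical helper name.

```python
import re
from collections import Counter

def vfs_per_pf(lspci_text):
    """Count Virtual Function entries per physical function label."""
    counts = Counter()
    for m in re.finditer(r"\[(PF_[\d.]+)_VF_\d+\]", lspci_text):
        counts[m.group(1)] += 1
    return dict(counts)

sample = """\
0000:37:00.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_0]
0000:37:00.3 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.0_VF_1]
0000:37:02.2 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function] [PF_0.55.1_VF_0]
"""
print(vfs_per_pf(sample))
```

Run against the full listing above, each of the two physical functions should report 16 entries. The 55 in the label is the decimal form of PCI bus 0x37.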

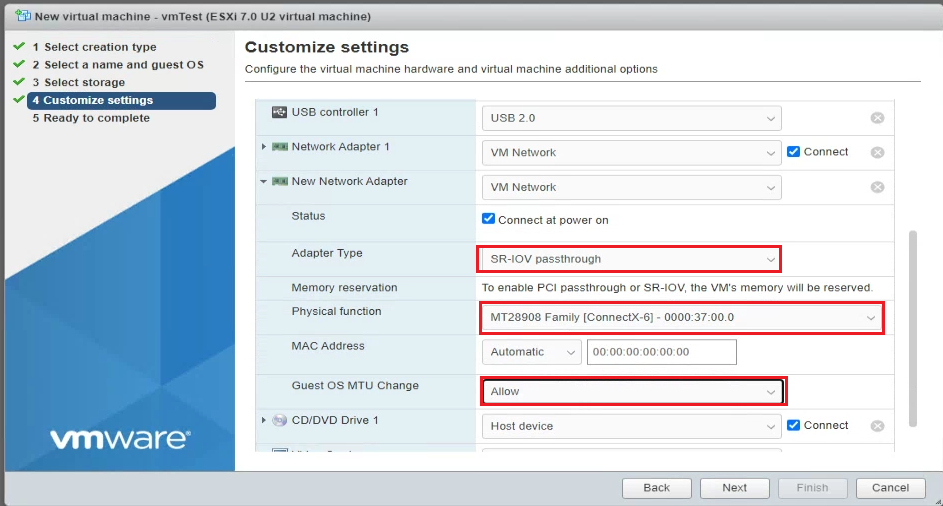

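Assign a Network Adapter to the VM in SR-IOV Mode

Note. For IB communication over SR-IOV, creating a dedicated virtual network and port group in VMware is recommended.

Reference. Virtual Switch:

Reference. Port Group:

a. Create a new VM, or power off the existing VM if one is reused.

b. Select "Add Network Adapter" (optional) and configure the adapter: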

- Adapter Type: SR-IOV passthrough

- Physical function: MLNX HCA

- Guest OS MTU Change: Allow

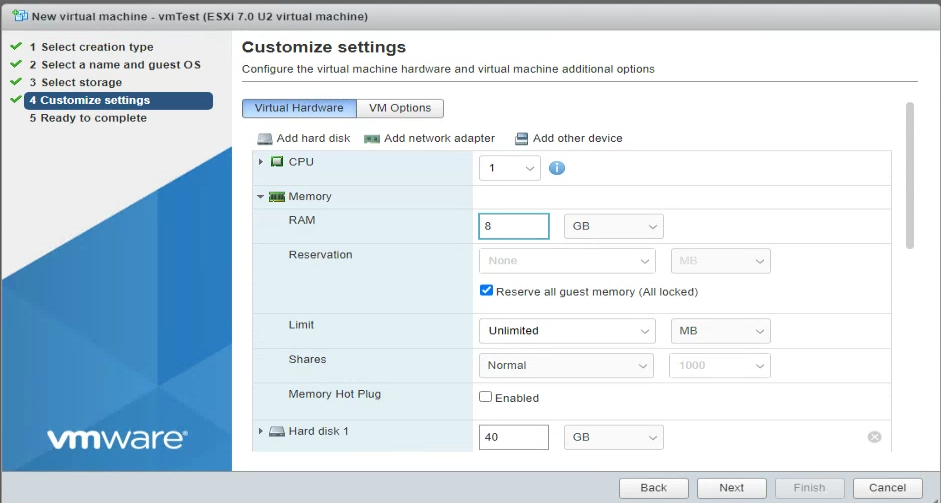

c. Select Reserve all guest memory (All locked).

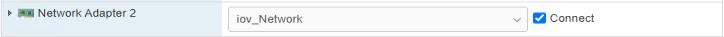

d. Select the network port group created for IB communication and check Connected (the "VM Network" field from step b).

e. Power on the VM and install or boot the OS.

f. Install the OFED driver in the VM guest OS.

Reference. RHEL Guest Install

1. Install RHEL 8.7 - Server with GUI

2. prerequisite

# mkdir -p /media/odd

# mount -t iso9660 -o loop /tmp/RHEL-8.7.0-20221013.1-x86_64-dvd1.iso /media/odd

or # mount -o loop /dev/cdrom /media/odd

# vim /etc/yum.repos.d/odd.repo

[RHEL8DVD_BaseOS]

name=RHEL8DVD_BaseOS

baseurl=file:///media/odd/BaseOS/

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[RHEL8DVD_AppStream]

name=RHEL8DVD_AppStream

baseurl=file:///media/odd/AppStream/

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
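The repository file above can also be generated instead of typed by hand. The following is a minimal sketch using the standard `configparser` module; the function name `dvd_repo` is hypothetical, and the section names and GPG key path simply mirror the file shown above.

```python
import configparser
import io

def dvd_repo(mount_point):
    """Render a .repo file with BaseOS and AppStream sections for a mounted DVD."""
    cfg = configparser.ConfigParser()
    cfg.optionxform = str  # keep key case as written
    for repo in ("BaseOS", "AppStream"):
        section = f"RHEL8DVD_{repo}"
        cfg[section] = {
            "name": section,
            "baseurl": f"file://{mount_point}/{repo}/",
            "enabled": "1",
            "gpgcheck": "1",
            "gpgkey": "file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release",
        }
    out = io.StringIO()
    cfg.write(out, space_around_delimiters=False)
    return out.getvalue()

print(dvd_repo("/media/odd"))
```

The printed text can be saved as /etc/yum.repos.d/odd.repo, the same path edited with vim above.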

# dnf -y groupinstall "Development Tools"

# dnf -y install kernel-devel-$(uname -r) kernel-headers-$(uname -r)

# dnf -y install createrepo python36-devel kernel-rpm-macros gcc-gfortran tk kernel-modules-extra tcl mstflint

Disable the firewall (note that systemctl stop only lasts until the next reboot):

# systemctl status firewalld

# systemctl stop firewalld

3. Install OFED

https://docs.nvidia.com/networking/display/MLNXOFEDv461000/Installing+Mellanox+OFED

# tar -xvzf /tmp/MLNX_OFED_LINUX-23.04-1.1.3.0-rhel8.7-x86_64.tgz -C /tmp

# cd /tmp/MLNX_OFED_LINUX-23.04-1.1.3.0-rhel8.7-x86_64

For unattended installation, use the --force installation option while running the MLNX_OFED installation script:

# ./mlnxofedinstall --add-kernel-support --force

# dracut -f

# reboot

4. Verify IB device operation

# lspci |grep mellanox -i

0b:00.0 Ethernet controller: Mellanox Technologies MT28908 Family [ConnectX-6 Virtual Function]

# lsmod | grep -e ib_ -e mlx_

ib_ipoib 155648 0

ib_cm 118784 2 rdma_cm,ib_ipoib

ib_umad 28672 0

ib_uverbs 143360 2 rdma_ucm,mlx5_ib

ib_core 442368 8 rdma_cm,ib_ipoib,iw_cm,ib_umad,rdma_ucm,ib_uverbs,mlx5_ib,ib_cm

mlx_compat 16384 11 rdma_cm,ib_ipoib,mlxdevm,iw_cm,ib_umad,ib_core,rdma_ucm,ib_uverbs,mlx5_ib,ib_cm,mlx5_core

nft_fib_inet 16384 1

nft_fib_ipv4 16384 1 nft_fib_inet

nft_fib_ipv6 16384 1 nft_fib_inet

nft_fib 16384 3 nft_fib_ipv6,nft_fib_ipv4,nft_fib_inet

nf_tables 180224 456 nft_ct,nft_compat,nft_reject_inet,nft_fib_ipv6,nft_objref,nft_fib_ipv4,nft_counter,nft_chain_nat,nf_tables_set,nft_reject,nft_fib,nft_fib_inet

# modinfo mlx5_core | grep -i version | head -1

version: 23.04-1.1.3

# ibstat -l

mlx5_0

# ibv_devinfo | grep -e "hca_id\|state\|link_layer"

hca_id: mlx5_0

state: PORT_DOWN (1)

link_layer: InfiniBand

# ibstat

CA 'mlx5_0'

CA type: MT4124

Number of ports: 1

Firmware version: 20.32.1010

Hardware version: 0

Node GUID: 0x000c29fffe16fd59

System image GUID: 0x9440c9ffffa575a8

Port 1:

State: Down

Physical state: Polling

Rate: 10

Base lid: 65535

LMC: 0

SM lid: 0

Capability mask: 0x2651ec48

Port GUID: 0x000c29fffe16fd59

Link layer: InfiniBand

# mst start

Starting MST (Mellanox Software Tools) driver set

Loading MST PCI modulemodprobe: ERROR: could not insert 'mst_pci': Required key not available

- Failure: 1

Loading MST PCI configuration modulemodprobe: ERROR: could not insert 'mst_pciconf': Required key not available

- Failure: 1

Create devices

mst_pci driver not found

Unloading MST PCI module (unused) - Success

Unloading MST PCI configuration module (unused) - Success

# /etc/init.d/openibd restart

Unloading HCA driver: [ OK ]

Loading Mellanox MLX5_IB HCA driver: [FAILED]

Loading Mellanox MLX5 HCA driver: [FAILED]

Loading HCA driver and Access Layer: [FAILED]

Please run /usr/sbin/sysinfo-snapshot.py to collect the debug information

and open an issue in the http://support.mellanox.com/SupportWeb/service_center/SelfService

5. While investigating the errors, external references were found that suggest they occur when Secure Boot is enabled, so Secure Boot was disabled for the VM.

Enable or Disable UEFI Secure Boot for a Virtual Machine.

Note. Uninstall OFED and re-install OFED.
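The "Required key not available" message in the mst output above is the error the kernel reports when it refuses a module whose signature it cannot verify, which is consistent with the Secure Boot suspicion. A small sketch for spotting this pattern in saved logs follows; `unsigned_module_failures` is a hypothetical helper, and a match is only a hint, not proof of the cause.

```python
import re

ENOKEY_PATTERN = re.compile(r"could not insert '([^']+)': Required key not available")

def unsigned_module_failures(log_text):
    """List kernel modules whose load failed with 'Required key not available'."""
    return ENOKEY_PATTERN.findall(log_text)

log = """\
Loading MST PCI modulemodprobe: ERROR: could not insert 'mst_pci': Required key not available
Loading MST PCI configuration modulemodprobe: ERROR: could not insert 'mst_pciconf': Required key not available
"""
failed = unsigned_module_failures(log)
if failed:
    print("Possible Secure Boot signature rejection for:", ", ".join(failed))
```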

6. Verify IB device operation again

# ibdev2netdev

mlx5_0 port 1 ==> ib0 (Up)

# ibstat

CA 'mlx5_0'

CA type: MT4124

Number of ports: 1

Firmware version: 20.32.1010

Hardware version: 0

Node GUID: 0x000c29fffe16fd59

System image GUID: 0x9440c9ffffa575a8

Port 1:

State: Active

Physical state: LinkUp

Rate: 100

Base lid: 7

LMC: 0

SM lid: 1

Capability mask: 0x2651ec48

Port GUID: 0x000c29fffe16fd59

Link layer: InfiniBand
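The difference between the failing and the working state is visible in three ibstat fields. The sketch below extracts them from saved output; `port_health` and `is_up` are hypothetical helpers, and treating "Active" plus "LinkUp" as the healthy state is based on the working output shown above.

```python
def port_health(ibstat_text):
    """Extract State, Physical state and Rate from ibstat-style 'Key: value' lines."""
    wanted = {"State", "Physical state", "Rate"}
    info = {}
    for line in ibstat_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key in wanted:
            info[key] = value.strip()
    return info

def is_up(info):
    """True when the port is logically Active and physically LinkUp."""
    return info.get("State") == "Active" and info.get("Physical state") == "LinkUp"

before = "State: Down\nPhysical state: Polling\nRate: 10\n"
after = "State: Active\nPhysical state: LinkUp\nRate: 100\n"
print(is_up(port_health(before)), is_up(port_health(after)))
```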

# /etc/init.d/openibd status

HCA driver loaded

Configured IPoIB devices:

ib0

Currently active IPoIB devices:

ib0

Configured Mellanox EN devices:

Currently active Mellanox devices:

ib0

The following OFED modules are loaded:

rdma_ucm

rdma_cm

ib_ipoib

mlx5_core

mlx5_ib

ib_uverbs

ib_umad

ib_cm

ib_core

mlxfw

# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:fd:f4 brd ff:ff:ff:ff:ff:ff

altname enp19s0

inet 192.168.0.11/21 brd 10.55.143.255 scope global dynamic noprefixroute ens224

valid_lft 21678sec preferred_lft 21678sec

inet6 fe80::20c:29ff:fe16:fdf4/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ib0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 2044 qdisc mq state UP group default qlen 256

link/infiniband 00:00:00:e9:fe:80:00:00:00:00:00:00:00:0c:29:ff:fe:16:fd:59 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

4: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:b3:8a:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever
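The `link/infiniband` address of ib0 is 20 bytes long, and its last 8 bytes carry the port GUID, so it can be matched against the Port GUID reported by ibstat. A minimal sketch, with `port_guid_from_ipoib` as a hypothetical helper name:

```python
def port_guid_from_ipoib(hwaddr):
    """Return the port GUID held in the last 8 bytes of a 20-byte IPoIB address."""
    octets = hwaddr.split(":")
    if len(octets) != 20:
        raise ValueError("expected a 20-byte IPoIB link-layer address")
    return "0x" + "".join(octets[-8:])

addr = "00:00:00:e9:fe:80:00:00:00:00:00:00:00:0c:29:ff:fe:16:fd:59"
print(port_guid_from_ipoib(addr))
```

For the address shown above this yields the same value as the Port GUID in the ibstat output, which confirms that ib0 sits on the SR-IOV virtual function.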

# cat /sys/class/infiniband/mlx5*/fw_ver

20.32.1010

# ibv_devinfo | grep -e "hca_id\|state\|link_layer"

hca_id: mlx5_0

state: PORT_ACTIVE (4)

link_layer: InfiniBand

Note. Several IB commands do not work inside the VM. This is presumed to be a limitation of the virtualized environment.

# ibnetdiscover

ibwarn: [3138] _do_madrpc: send failed; Invalid argument

ibwarn: [3138] mad_rpc: _do_madrpc failed; dport (DR path slid 0; dlid 0; 0)

/var/tmp/OFED_topdir/BUILD/rdma-core-2304mlnx44/libibnetdisc/ibnetdisc.c:811; Failed to resolve self

ibnetdiscover: iberror: failed: discover failed

# sminfo

ibwarn: [3249] _do_madrpc: send failed; Operation not permitted

ibwarn: [3249] mad_rpc: _do_madrpc failed; dport (Lid 1)

sminfo: iberror: failed: query

# ibhosts

ibwarn: [3367] _do_madrpc: send failed; Invalid argument

ibwarn: [3367] mad_rpc: _do_madrpc failed; dport (DR path slid 0; dlid 0; 0)

/var/tmp/OFED_topdir/BUILD/rdma-core-2304mlnx44/libibnetdisc/ibnetdisc.c:811; Failed to resolve self

/usr/sbin/ibnetdiscover: iberror: failed: discover failed

# iblinkinfo

ibwarn: [3378] _do_madrpc: send failed; Invalid argument

ibwarn: [3378] mad_rpc: _do_madrpc failed; dport (DR path slid 0; dlid 0; 0)

/var/tmp/OFED_topdir/BUILD/rdma-core-2304mlnx44/libibnetdisc/ibnetdisc.c:811; Failed to resolve self

discover failed

IB Communication Test

Linux Guest VM:

# ib_send_bw -a -d mlx5_0 -n 1000 --report_gbit --report-both -F

************************************

* Waiting for client to connect... *

************************************

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : mlx5_0

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : ON

RX depth : 512

CQ Moderation : 100

Mtu : 4096[B]

Link type : IB

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0x07 QPN 0x00c7 PSN 0x9d79e

remote address: LID 0x08 QPN 0x0047 PSN 0xd3598f

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[Gb/sec] BW average[Gb/sec] MsgRate[Mpps]

2 1000 0.000000 0.060824 3.801478

...

8388608 1000 0.00 96.68 0.001441

---------------------------------------------------------------------------------------

Linux Host:

# ib_send_bw -a -d mlx5_0 -n 1000 <%Guest_VM-IP_addr%> --report_gbit --report-both -F

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : mlx5_0

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : ON

TX depth : 128

CQ Moderation : 100

Mtu : 4096[B]

Link type : IB

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0x08 QPN 0x0047 PSN 0xd3598f

remote address: LID 0x07 QPN 0x00c7 PSN 0x9d79e

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[Gb/sec] BW average[Gb/sec] MsgRate[Mpps]

2 1000 0.084211 0.059216 3.700986

...

8388608 1000 96.81 96.81 0.001443

---------------------------------------------------------------------------------------
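To put the measured bandwidth in context, the result rows can be parsed and compared with the link rate. The sketch below is illustrative: `bw_rows` and `link_utilization` are hypothetical helpers, the five-column row layout follows the report above, and the 100 Gb/s link rate is taken from the "Rate: 100" line in the ibstat output.

```python
def bw_rows(report_text):
    """Parse result rows: bytes, iterations, peak Gb/s, average Gb/s, Mpps."""
    rows = []
    for line in report_text.splitlines():
        fields = line.split()
        if len(fields) == 5 and fields[0].isdigit():
            rows.append((int(fields[0]), int(fields[1]), *map(float, fields[2:])))
    return rows

def link_utilization(avg_gbps, link_rate_gbps):
    """Average bandwidth as a percentage of the nominal link rate."""
    return 100.0 * avg_gbps / link_rate_gbps

sample = """\
#bytes #iterations BW peak[Gb/sec] BW average[Gb/sec] MsgRate[Mpps]
2 1000 0.084211 0.059216 3.700986
8388608 1000 96.81 96.81 0.001443
"""
largest = max(bw_rows(sample), key=lambda r: r[0])
print(f"{link_utilization(largest[3], 100):.1f}% of line rate")
```

Only the largest message size is used here because small messages are limited by message rate rather than by link bandwidth.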